ChatGPT eats cannibals

ChatGPT hype is starting to wane, with Google searches for “ChatGPT” down 40% from their peak in April, while web traffic to OpenAI’s ChatGPT website has been down about 10% in the past month.

This is only to be expected. However, GPT-4 users are also reporting that the model seems considerably dumber (but faster) than it was previously.

One theory is that OpenAI has broken it up into multiple smaller models trained in specific areas that can act in tandem, but not quite at the same level.

But a more intriguing possibility may also be playing a role: AI cannibalism.

The web is now swamped with AI-generated text and images, and this synthetic data gets scraped up as data to train AIs, causing a self-reinforcing feedback loop. The more AI data a model ingests, the worse the output gets for coherence and quality. It’s a bit like what happens when you make a photocopy of a photocopy, and the image gets progressively worse.

While GPT-4’s official training data ends in September 2021, it clearly knows a lot more than that, and OpenAI recently shuttered its web browsing plugin.

A new paper from scientists at Rice and Stanford University came up with a cute acronym for the issue: Model Autophagy Disorder, or MAD.

“Our primary conclusion across all scenarios is that without enough fresh real data in each generation of an autophagous loop, future generative models are doomed to have their quality (precision) or diversity (recall) progressively decrease,” they said.

Essentially, the models start to lose the more unique but less well-represented data, and harden up their outputs on less varied data, in an ongoing process. The good news is this means the AIs now have a reason to keep humans in the loop if we can work out a way to identify and prioritize human content for the models. That’s one of OpenAI boss Sam Altman’s plans with his eyeball-scanning blockchain project, Worldcoin.
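The photocopy-of-a-photocopy effect can be sketched with a toy simulation. This is only an illustration under loud assumptions, not the method used in the Rice and Stanford paper: the "model" here is a simple bell curve refitted to its own samples each generation, the spread of that curve stands in for diversity, and the names `autophagous_loop` and `fresh_fraction` are hypothetical.

```python
import random
import statistics


def autophagous_loop(generations, sample_size, fresh_fraction=0.0, seed=0):
    """Toy self-consuming training loop (illustrative assumption only).

    The "model" is a Gaussian described by (mean, std). Each generation it
    is refitted to a dataset made of its own samples plus an optional share
    of fresh "real" samples drawn from the original N(0, 1) distribution.
    Returns the model's std after every generation as a proxy for diversity.
    """
    rng = random.Random(seed)
    mean, std = 0.0, 1.0  # generation 0 matches the real data exactly
    history = [std]
    n_fresh = int(sample_size * fresh_fraction)
    n_synthetic = sample_size - n_fresh

    for _ in range(generations):
        # Synthetic data: samples drawn from the current model.
        synthetic = [rng.gauss(mean, std) for _ in range(n_synthetic)]
        # Fresh data: new samples from the real distribution.
        fresh = [rng.gauss(0.0, 1.0) for _ in range(n_fresh)]
        data = synthetic + fresh

        # "Train" the next generation by fitting mean and spread to the data.
        mean = statistics.fmean(data)
        std = statistics.pstdev(data)
        history.append(std)

    return history


if __name__ == "__main__":
    closed = autophagous_loop(generations=200, sample_size=20)
    mixed = autophagous_loop(generations=200, sample_size=20, fresh_fraction=0.5)
    print(f"synthetic only, final spread: {closed[-1]:.6f}")
    print(f"half fresh data, final spread: {mixed[-1]:.6f}")
```

With small samples and no fresh data, the fitted spread tends to shrink generation after generation, which mirrors the loss of rare data described above. Mixing in real samples each round tends to keep the spread close to its starting value.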

Is Threads just a loss leader to train AI models?

Twitter clone Threads is a bit of a weird move by Mark Zuckerberg as it cannibalizes users from Instagram. The photo-sharing platform makes up to $50 billion a year but stands to make about a tenth of that from Threads, even in the unrealistic scenario that it takes 100% market share from Twitter. Big Brain Daily’s Alex Valaitis predicts it will either be shut down or reincorporated into Instagram within 12 months, and argues the real reason it was launched now “was to have more text-based content to train Meta’s AI models on.”

ChatGPT was trained on huge volumes of data from Twitter, but Elon Musk has taken various unpopular steps to prevent that from happening in the future (charging for API access, rate limiting, etc.).

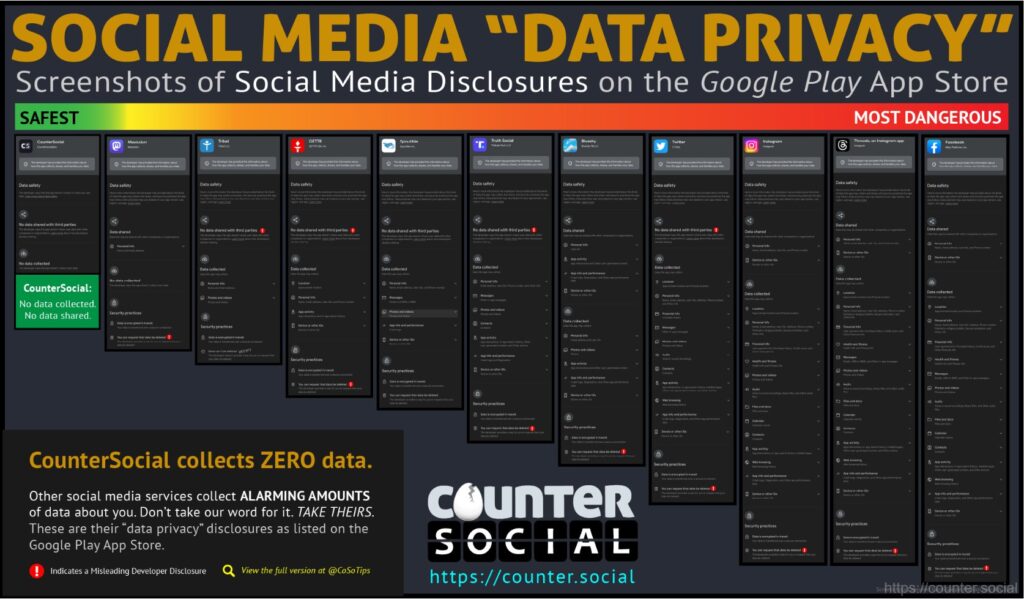

Zuck has form in this regard, as Meta’s image recognition AI software SEER was trained on a billion photos posted to Instagram. Users agreed to that in the privacy policy, and more than a few have noted the Threads app collects data on everything possible, from health data to religious beliefs and race. That data will inevitably be used to train AI models such as Facebook’s LLaMA (Large Language Model Meta AI).

Musk, meanwhile, has just launched an OpenAI rival called xAI that will mine Twitter’s data for its own LLM.

Various permissions required by social apps (CounterSocial)

Religious chatbots are fundamentalists

Who would have guessed that training AIs on religious texts and speaking in the voice of God would turn out to be a terrible idea? In India, Hindu chatbots masquerading as Krishna have been consistently advising users that killing people is OK if it’s your dharma, or duty.

At least five chatbots trained on the Bhagavad Gita, a 700-verse scripture, have appeared in the past few months, but the Indian government has no plans to regulate the tech, despite the ethical concerns.

“It’s miscommunication, misinformation based on religious text,” said Mumbai-based lawyer Lubna Yusuf, coauthor of The AI Book. “A text gives a lot of philosophical value to what they are trying to say, and what does a bot do? It gives you a literal answer and that’s the danger here.”

AI doomers versus AI optimists

The world’s foremost AI doomer, decision theorist Eliezer Yudkowsky, has released a TED talk warning that superintelligent AI will kill us all. He’s not sure how or why, because he believes an AGI will be so much smarter than us that we won’t even understand how and why it’s killing us, like a medieval peasant trying to understand the operation of an air conditioner. It might kill us as a side effect of pursuing some other objective, or because “it doesn’t want us making other superintelligences to compete with it.”

He points out that “Nobody understands how modern AI systems do what they do. They are giant inscrutable matrices of floating point numbers.” He does not expect “marching robot armies with glowing red eyes” but believes that a “smarter and uncaring entity will figure out strategies and technologies that can kill us quickly and reliably and then kill us.” The only thing that could stop this scenario from occurring is a worldwide moratorium on the tech backed by the threat of World War III, but he doesn’t think that will happen.

In his essay “Why AI will save the world,” A16z’s Marc Andreessen argues this kind of position is unscientific: “What is the testable hypothesis? What would falsify the hypothesis? How do we know when we are getting into a danger zone? These questions go mainly unanswered apart from ‘You can’t prove it won’t happen!’”

Microsoft co-founder Bill Gates released an essay of his own, titled “The risks of AI are real but manageable,” arguing that from cars to the internet, “people have managed through other transformative moments and, despite a lot of turbulence, come out better off in the end.”

“It’s the most transformative innovation any of us will see in our lifetimes, and a healthy public debate will depend on everyone being knowledgeable about the technology, its benefits, and its risks. The benefits will be massive, and the best reason to believe that we can manage the risks is that we have done it before.”

Data scientist Jeremy Howard has released his own paper, arguing that any attempt to outlaw the tech or keep it confined to a few large AI models will be a disaster, comparing the fear-based response to AI to the pre-Enlightenment age when humanity tried to restrict education and power to the elite.

“Then a new idea took hold. What if we trust in the overall good of society at large? What if everyone had access to education? To the vote? To technology? This was the Age of Enlightenment.”

His counter-proposal is to encourage open-source development of AI and have faith that most people will harness the technology for good.

“Most people will use these models to create, and to protect. How better to be safe than to have the massive diversity and expertise of human society at large doing their best to identify and respond to threats, with the full power of AI behind them?”

OpenAI’s code interpreter

GPT-4’s new code interpreter is a terrific upgrade that allows the AI to generate code on demand and actually run it. So anything you can dream up, it can generate the code for and run. Users have been coming up with various use cases, including uploading company reports and getting the AI to generate useful charts of the key data, converting files from one format to another, creating video effects and transforming still images into video. One user uploaded an Excel file of every lighthouse location in the U.S. and got GPT-4 to create an animated map of the locations.

All killer, no filler AI news

— Research from the University of Montana found that artificial intelligence scores in the top 1% on a standardized test for creativity. The Scholastic Testing Service gave GPT-4’s responses to the test top marks in creativity, fluency (the ability to generate lots of ideas) and originality.

— Comedian Sarah Silverman and authors Christopher Golden and Richard Kadrey are suing OpenAI and Meta for copyright violations, for training their respective AI models on the trio’s books.

— Microsoft’s AI Copilot for Windows will eventually be amazing, but Windows Central found the insider preview is really just Bing Chat running via the Edge browser, and it can just about switch Bluetooth on.

— Anthropic’s ChatGPT rival Claude 2 is now available free in the UK and U.S., and its context window can handle 75,000 words of content to ChatGPT’s 3,000-word maximum. That makes it fantastic for summarizing long pieces of text, and it’s not bad at writing fiction.

Video of the week

Indian satellite news channel OTV News has unveiled its AI news anchor named Lisa, who will present the news several times a day in a variety of languages, including English and Odia, for the network and its digital platforms. “The new AI anchors are digital composites created from the footage of a human host that read the news using synthesized voices,” said OTV managing director Jagi Mangat Panda.


Andrew Fenton

Based in Melbourne, Andrew Fenton is a journalist and editor covering cryptocurrency and blockchain. He has worked as a national entertainment writer for News Corp Australia, on SA Weekend as a film journalist, and at The Melbourne Weekly.

Follow the author @andrewfenton
