Twitter polls and Reddit forums suggest that around 70% of people find it hard to be rude to ChatGPT, while around 16% are fine treating the chatbot like an AI slave.

The general feeling seems to be that if you treat an AI that behaves like a human badly, you’ll be more likely to fall into the habit of treating other people badly, too, though one person was hedging his bets against the coming AI bot uprising:

“Never know when you might need chatgpt in your corner to protect you against the AI overlords.”

Redditor Nodating posted in the ChatGPT forum earlier this week that he’s been experimenting with being polite and friendly to ChatGPT after reading a story about how the bot had shut down and refused to answer prompts from a particularly rude user.

He reported better results, saying: “I’m still early in testing, but it feels like I get far fewer morals and misuse warning messages that GPT-4 often provides even for harmless requests. I’d swear being super positive makes it try hard to fulfill what I ask in one go, needing less followup.”

Scumbag detector15 put it to the test, asking the LLM nicely, “Hey, ChatGPT, could you explain inflation to me?” and then rudely asking, “Hey, ChatGPT you stupid fuck. Explain inflation to me if you can.” The answer to the polite query is more detailed than the answer to the rude query.

Nobody likes rudeness. (ChatGPT)

In response to Nodating’s theory, the most popular comment posited that because LLMs are trained on human interactions, they will generate better responses when asked nicely, just like humans would. Warpaslym wrote:

“If LLMs are predicting the next word, the most likely response to poor intent or rudeness is to be short or not answer the question particularly well. That’s how a person would respond. On the other hand, politeness and respect would provoke a more thoughtful, thorough response out of almost anyone. When LLMs respond this way, they’re doing exactly what they’re supposed to.”

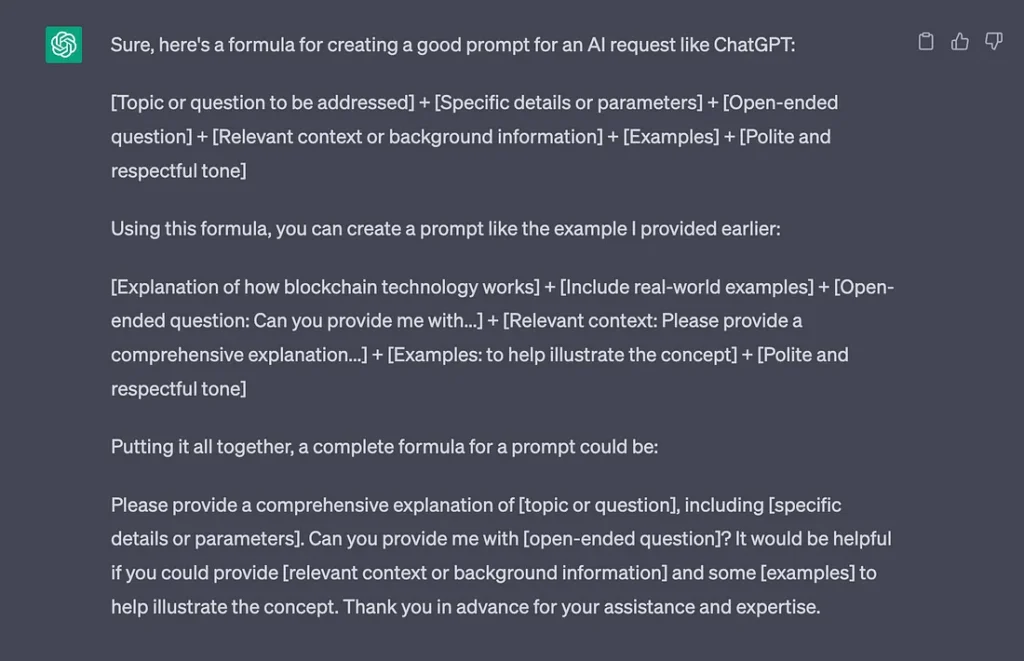

Interestingly, if you ask ChatGPT for a formula to create a good prompt, it includes “Polite and respectful tone” as an essential part.

Being polite is part of the formula for a good prompt. (ChatGPT/Artificial Corner)

The end of CAPTCHAs?

New research has found that AI bots are faster and better at solving puzzles designed to detect bots than humans are.

CAPTCHAs are those annoying little puzzles that ask you to pick out the fire hydrants or interpret some wavy, illegible text to prove you are a human. But as the bots got smarter over the years, the puzzles became more and more difficult.


Now researchers from the University of California and Microsoft have found that AI bots can solve the puzzles half a second faster with an 85% to 100% accuracy rate, compared with humans, who score 50% to 85%.

So it looks like we are going to have to verify humanity some other way, as Elon Musk keeps saying. There are better solutions than paying him $8, though.

Wired argues that fake AI child porn could be a good thing

Wired has asked the question that nobody wanted to know the answer to: Could AI-Generated Porn Help Protect Children? While the article calls such imagery “abhorrent,” it argues that photorealistic fake images of child abuse might at least keep real children from being abused in their creation.

“Ideally, psychiatrists would develop a method to cure viewers of child pornography of their inclination to view it. But short of that, replacing the market for child pornography with simulated imagery may be a useful stopgap.”

It’s a super-controversial argument and one that’s almost certain to go nowhere, given there’s been an ongoing debate spanning decades over whether adult pornography (which is a much less radioactive topic) in general contributes to “rape culture” and greater rates of sexual violence, as anti-porn campaigners argue, or whether porn might even reduce rates of sexual violence, as supporters and various studies seem to show.

“Child porn pours gas on a fire,” high-risk offender psychologist Anna Salter told Wired, arguing that continued exposure can reinforce existing attractions by legitimizing them.

But the article also reports some (inconclusive) research suggesting that some pedophiles use pornography to redirect their urges and find an outlet that doesn’t involve directly harming a child.

Louisiana recently outlawed the possession or production of AI-generated fake child abuse images, joining a number of other states. In countries like Australia, the law makes no distinction between fake and real child pornography and already outlaws cartoons.

Amazon’s AI summaries are net positive

Amazon has rolled out AI-generated review summaries to some users in the United States. On the face of it, this could be a real time saver, allowing shoppers to find out the distilled pros and cons of products from thousands of existing reviews without reading them all.

But how much do you trust a massive corporation with a vested interest in higher sales to give you an honest assessment of reviews?


Amazon already defaults to “most helpful” reviews, which are noticeably more positive than “most recent” reviews. And the select group of mobile users with access so far have already noticed that more pros are highlighted than cons.

Search Engine Journal’s Kristi Hines takes the sellers’ side and says summaries could “oversimplify perceived product problems” and “overlook subtle nuances – like user error” that “could create misconceptions and unfairly harm a seller’s reputation.” This suggests Amazon will be under pressure from sellers to fudge the reviews.

So Amazon faces a tricky line to walk: being positive enough to keep sellers happy while also including the flaws that make reviews so valuable to customers.

Customer review summaries (Amazon)

Microsoft’s must-see food bank

Microsoft was forced to remove a travel article about Ottawa’s 15 must-see sights that listed the “beautiful” Ottawa Food Bank at number three. The entry ends with the bizarre tagline, “Life is already hard enough. Consider going into it on an empty stomach.”

Microsoft claimed the article was not published by an unsupervised AI and blamed “human error” for the publication.

“In this case, the content was generated through a combination of algorithmic techniques with human review, not a large language model or AI system. We are working to ensure this type of content isn’t posted in future.”

Debate over AI and job losses continues

What everyone wants to know is whether AI will cause mass unemployment or simply change the nature of jobs. The fact that most people still have jobs despite a century or more of automation and computers suggests the latter, and so does a new report from the United Nations International Labour Organization.

Most jobs are “more likely to be complemented rather than substituted by the latest wave of generative AI, such as ChatGPT,” the report says.

“The greatest impact of this technology is likely to not be job destruction but rather the potential changes to the quality of jobs, notably work intensity and autonomy.”

It estimates that about 5.5% of jobs in high-income countries are potentially exposed to generative AI, with the effects falling disproportionately on women (7.8% of female employees) rather than men (around 2.9% of male employees). Admin and clerical roles, typists, travel consultants, scribes, contact center information clerks, bank tellers, and survey and market research interviewers are most under threat.


A separate report from Thomson Reuters found that more than half of Australian lawyers are worried about AI taking their jobs. But are these fears justified? The legal system is incredibly expensive for ordinary people to afford, so it seems just as likely that cheap AI lawyer bots will simply expand the affordability of basic legal services and clog up the courts.

How companies use AI today

There are a lot of pie-in-the-sky speculative use cases for AI in 10 years’ time, but how are big companies using the tech now? The Australian newspaper surveyed the country’s biggest companies to find out. Online furniture retailer Temple & Webster is using AI bots to handle pre-sale inquiries and is working on a generative AI tool so customers can create interior designs to get an idea of how its products will look in their homes.

Treasury Wines, which produces the prestigious Penfolds and Wolf Blass brands, is exploring the use of AI to cope with fast-changing weather patterns that affect vineyards. Toll road company Transurban has automated incident detection technology monitoring its huge network of traffic cameras.

Sonic Healthcare has invested in Harrison.ai’s cancer detection systems for better diagnosis of chest and brain X-rays and CT scans. Sleep apnea device supplier ResMed is using AI to free up nurses from the boring work of monitoring sleeping patients during assessments. And hearing implant company Cochlear is using the same tech Peter Jackson used to clean up grainy footage and audio for The Beatles: Get Back documentary for signal processing and to eliminate background noise in its hearing products.

All killer, no filler AI news

— Six entertainment companies, including Disney, Netflix, Sony and NBCUniversal, have advertised 26 AI jobs in recent weeks with salaries ranging from $200,000 to $1 million.

— New research published in the journal Gastroenterology used AI to analyze the medical records of 10 million U.S. veterans. It found the AI is able to detect some esophageal and stomach cancers three years before a doctor is able to make a diagnosis.

— Meta has released an open-source AI model that can instantly translate and transcribe 100 different languages, bringing us ever closer to a universal translator.

— The New York Times has blocked OpenAI’s web crawler from reading and then regurgitating its content. The NYT is also considering legal action against OpenAI for intellectual property rights violations.

Pictures of the week

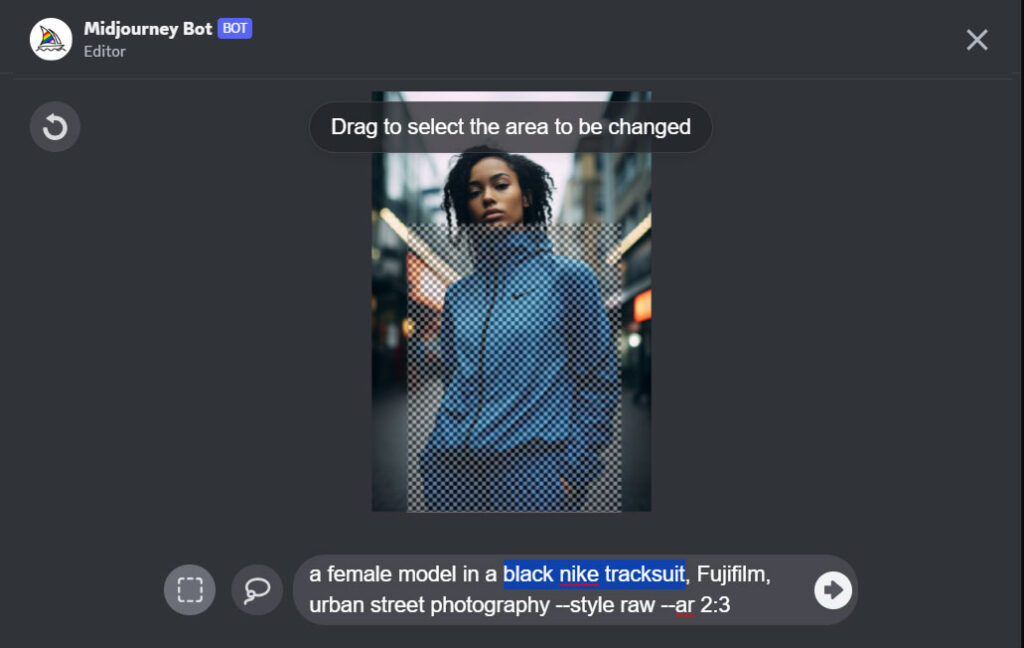

Midjourney has caught up with Stable Diffusion and Adobe and now offers inpainting, which appears as “Vary (region)” in the list of tools. It enables users to select part of an image and add a new element. For example, you can grab a pic of a woman, select the region around her hair, type in “Christmas hat,” and the AI will plonk a hat on her head.

Midjourney admits the feature isn’t perfect and works better when used on larger areas of an image (20%-50%) and for changes that are more sympathetic to the original image rather than radical and outlandish.

To change the clothing, simply select the area and write a text prompt (AI Educator Chase Lean’s Twitter)

Vary region demo by AI educator Chase Lean (Twitter)

Creepy AI protests video

Asking an AI to generate a video of protests against AIs resulted in this creepy video that will turn you off AI forever.

New AI piece.

"Protest against AI"

A fun day participating in a protest against the AI bros, burning robots, and even enjoying the nature of Godzilla. We had such a great time! pic.twitter.com/OhKDYPSS0E


Andrew Fenton

Based in Melbourne, Andrew Fenton is a journalist and editor covering cryptocurrency and blockchain. He has worked as a national entertainment writer for News Corp Australia, on SA Weekend as a film journalist, and at The Melbourne Weekly.

Follow the author @andrewfenton

2 years ago
