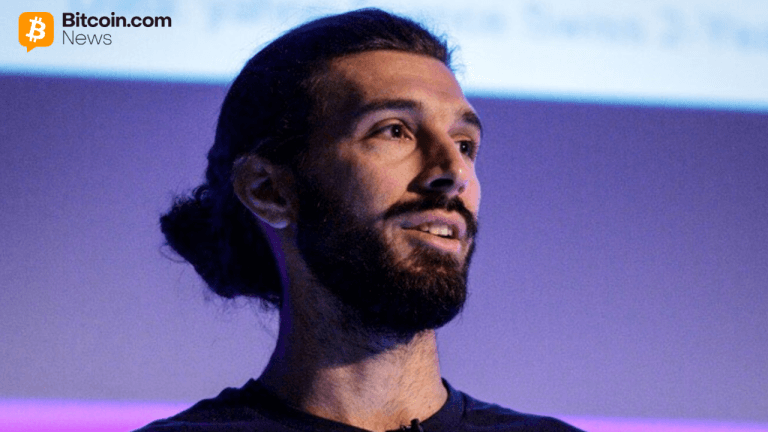

Yu Xian, founder of the blockchain security firm Slowmist, has raised alarms about a rising threat known as AI code poisoning.

This attack type involves injecting harmful code into the training data of AI models, which can pose risks for users who depend on these tools for technical tasks.

The incident

The issue gained attention after a troubling incident involving OpenAI’s ChatGPT. On Nov. 21, a crypto trader named “r_cky0” reported losing $2,500 in digital assets after seeking ChatGPT’s help to create a bot for Solana-based memecoin generator Pump.fun.

However, the chatbot recommended a fraudulent Solana API website, which led to the theft of the user’s private keys. The victim noted that within 30 minutes of using the malicious API, all assets were drained to a wallet linked to the scam.

[Editor’s Note: ChatGPT appears to have recommended the API after running a search using the new SearchGPT, as a ‘sources’ section can be seen in the screenshot. Therefore, it does not appear to be a case of AI poisoning but a failure of the AI to recognize scam links in search results.]

AI scam link API (Source: X)

Further investigation revealed that this address consistently receives stolen tokens, reinforcing suspicions that it belongs to a fraudster.

The Slowmist founder noted that the fraudulent API’s domain name was registered two months ago, suggesting the attack was premeditated. Xian added that the website lacked detailed content, consisting only of documents and code repositories.

While the poisoning appears deliberate, no evidence suggests OpenAI intentionally integrated the malicious data into ChatGPT’s training, with the result likely coming from SearchGPT.

Implications

Blockchain security firm Scam Sniffer noted that this incident illustrates how scammers pollute AI training data with harmful crypto code. The firm said that a GitHub user, “solanaapisdev,” has created multiple repositories in recent months to manipulate AI models into generating fraudulent outputs.

AI tools like ChatGPT, now used by hundreds of millions, face increasing challenges as attackers find new ways to exploit them.

Xian cautioned crypto users about the risks tied to large language models (LLMs) like GPT. He emphasized that AI poisoning, once a theoretical risk, has now materialized into a real threat. Without more robust defenses, incidents like this could undermine trust in AI-driven tools and expose users to further financial losses.

The post Blockchain security firm warns of AI code poisoning risk after OpenAI’s ChatGPT recommends scam API appeared first on CryptoSlate.

1 year ago